Neural Network Math: Forward Propagation

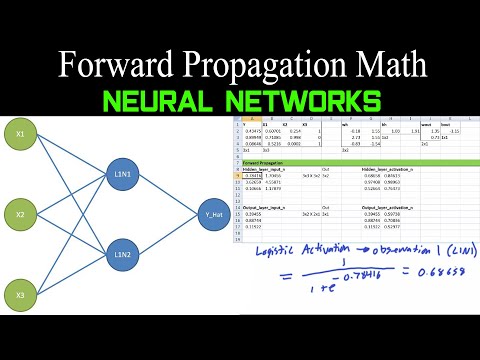

The math behind a basic neural network is not too complicated, but it is important to understand how it works if you want to apply neural networks properly. In this example we will use a logistic activation function for the hidden layer and a linear activation for the output layer. Matrix multiplication can be used to compute a whole layer in one step instead of working out each neuron separately. An example will be shown in Excel using matrix multiplication.
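The forward pass described above can also be sketched outside Excel. The following is a minimal illustration in plain Python, not the spreadsheet from the video: the layer sizes, weights, biases, and the helper names (`sigmoid`, `matmul`, `add_bias`, `forward`) are all made-up assumptions chosen only to show the order of operations (multiply by weights, add biases, apply the logistic function in the hidden layer, then apply a linear output layer).

```python
import math


def sigmoid(z):
    """Logistic activation: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def matmul(a, b):
    """Multiply matrix a (n x k) by matrix b (k x m); both are lists of rows."""
    return [
        [sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def add_bias(m, bias):
    """Add one bias value per column to every row of m."""
    return [[v + b for v, b in zip(row, bias)] for row in m]


def forward(x, w_hidden, b_hidden, w_out, b_out):
    """Forward propagation: logistic hidden layer, linear output layer."""
    hidden_in = add_bias(matmul(x, w_hidden), b_hidden)
    hidden_out = [[sigmoid(v) for v in row] for row in hidden_in]
    # Linear output activation: the weighted sum plus bias is the prediction.
    return add_bias(matmul(hidden_out, w_out), b_out)


# Hypothetical network: 2 inputs, 2 hidden neurons, 1 output (values are made up).
x = [[1.0, 0.5]]                       # one sample, one row
w_hidden = [[0.2, -0.4], [0.7, 0.1]]   # rows = inputs, columns = hidden neurons
b_hidden = [0.1, -0.2]
w_out = [[0.5], [-0.3]]                # rows = hidden neurons, columns = outputs
b_out = [0.05]

print(forward(x, w_hidden, b_hidden, w_out, b_out))
```

Adding more rows to `x` pushes several samples through the network in the same pair of matrix multiplications, which is the convenience the matrix form offers.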

This video covers forward propagation. Another video will cover back propagation, which is used to optimize the weights and biases. That optimization step is considered the supervised learning process.
