Build your LLM App without a vector database (in 30 lines of code)

We will show you how to create a responsive AI application leveraging OpenAI/Hugging Face APIs to provide natural language responses to user queries. No vector database required.

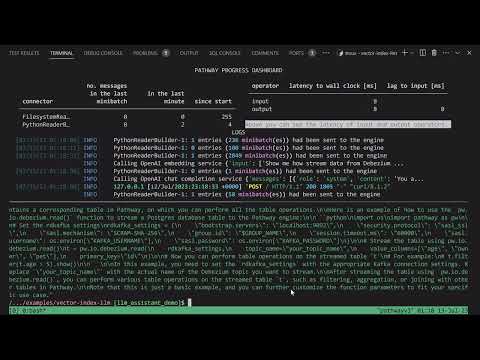

LLM App is a chatbot application that answers user queries in real time, based on the freshest knowledge available in a document store. It does not require a separate vector database, which helps avoid fragmented LLM stacks (such as Pinecone/Weaviate + LangChain + Redis + FastAPI +…). Document data stays where it is already stored, and LLM App adds a light but integrated data processing layer on top of it that is highly performant and easy to customize and extend. It is particularly well suited to privacy-preserving LLM applications.
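To make "no separate vector database" concrete, here is a minimal sketch of the general idea: the retrieval index lives inside the application process and is updated in place when a document changes, so the next query already sees the new content. This is not LLM App's or Pathway's API. `InMemoryIndex` and `build_prompt` are hypothetical names, and the token-count "embedding" is a toy stand-in for a real embedding model (for example one served through the OpenAI or Hugging Face APIs). The call to the LLM itself is omitted.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy sparse embedding (token counts); a stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors (missing tokens count as 0)."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class InMemoryIndex:
    """Hypothetical index kept in application memory instead of a vector database."""

    def __init__(self) -> None:
        self._docs: dict[str, tuple[str, Counter]] = {}

    def upsert(self, doc_id: str, text: str) -> None:
        # Re-embedding on every change keeps answers based on the freshest version.
        self._docs[doc_id] = (text, embed(text))

    def delete(self, doc_id: str) -> None:
        self._docs.pop(doc_id, None)

    def query(self, question: str, k: int = 1) -> list[tuple[str, str]]:
        # Brute-force nearest neighbours: score every document, keep the top k.
        q = embed(question)
        ranked = sorted(
            self._docs.items(), key=lambda item: cosine(q, item[1][1]), reverse=True
        )
        return [(doc_id, text) for doc_id, (text, _) in ranked[:k]]


def build_prompt(question: str, passages: list[str]) -> str:
    """Assemble retrieved passages into a prompt for the LLM (call not shown)."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


index = InMemoryIndex()
index.upsert("pricing", "The Pro plan costs 20 dollars per month.")
index.upsert("support", "Support is available by email on weekdays.")
question = "How much does the Pro plan cost?"
print(index.query(question)[0][0])  # pricing
print(build_prompt(question, [text for _, text in index.query(question)]))
```

Brute-force scoring is fine for small document sets; the trade-off a dedicated vector database addresses is approximate search at much larger scale, which this sketch does not attempt.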

Speaker bio: Jan Chorowski developed attention models while working at Google Brain and MILA. He has 10k+ citations and has co-authored papers with Geoff Hinton and Yoshua Bengio. Jan is CTO at Pathway.

Try it out: https://github.com/pathwaycom/llm-app